Retrieving AKS on Azure Local admin credentials

I was recently asked how a customer could connect to an AKS instance hosted on Azure Local via kubectl, so they could use locally hosted Argo CD pipelines to manage their containerized workloads (e.g. via Helm charts). The question came up because the Microsoft documentation doesn't make it clear how to do this. For example, the How To Create a Kubernetes Cluster using Azure CLI article shows that once you've created a cluster, you use the az connectedk8s proxy Azure CLI command to create a proxy connection that allows kubectl commands to be run.

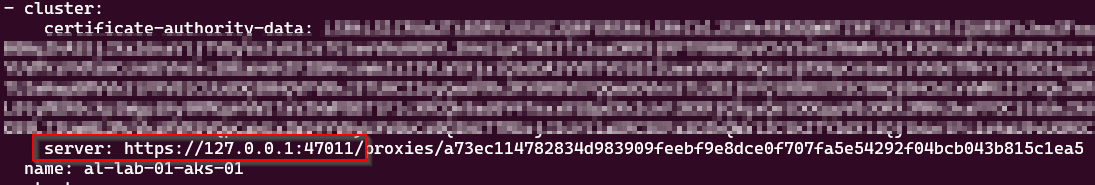

That's fine for interactive sessions, such as running the az connectedk8s proxy command in one shell and kubectl in another. Once the proxy session is closed, though, the kubeconfig is useless, as it looks something like this:

If you try to run kubectl once the proxy session is closed, it will fail. Editing the config file so the server URL points at one of the control-plane node IP addresses on port 6443 doesn't work either, because no client certificate is provided.
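To see this for yourself, you can check whether a given kubeconfig carries a client certificate at all. Below is a minimal sketch. The function name is hypothetical, and it only looks for the standard `client-certificate-data` / `client-certificate` keys that kubectl uses for certificate-based authentication:

```shell
# Hypothetical helper (not part of kubectl or the az CLI): succeeds when the
# kubeconfig file given as $1 contains a client certificate entry, i.e. the
# client-certificate-data or client-certificate key used for cert-based auth.
kubeconfig_has_client_cert() {
  kubeconfig_file=$1
  # Return 2 (distinct from "no cert found") if the file can't be read at all.
  [ -r "$kubeconfig_file" ] || return 2
  grep -Eq '^[[:space:]]*client-certificate(-data)?:' "$kubeconfig_file"
}
```

For example, `kubeconfig_has_client_cert ~/.kube/config || echo "no client certificate"` reports whether the config you're using could authenticate directly against the API server.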

To get the correct config we have 3 options:

Use Azure CLI to retrieve the admin credentials

SSH to one of the AKS control-plane nodes and retrieve the admin.conf file

Run a debug container on one of the control-plane nodes and retrieve the admin.conf file

I'll go through all 3 options, starting with the easiest.

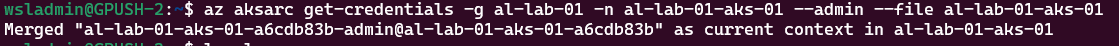

Using Azure CLI

Assuming you’re already logged in to the correct Azure tenant, you can use a single command to get the admin credentials:

az aksarc get-credentials --resource-group <myResourceGroup> --name <myAKSCluster> --admin --file <myclusterconf>

A word of caution when running the command: if you don't supply the --file parameter, it merges the config into the default ~/.kube/config file. If you want to use the credentials for automation purposes, it's best to keep them in a separate file. Otherwise the admin credentials end up merged into your everyday config, which is a security risk.
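Following that advice, here is a minimal sketch for keeping the admin file separate and locked down. `chmod 600` and the `KUBECONFIG` environment variable are standard. The function name is hypothetical:

```shell
# Hypothetical helper: lock down a kubeconfig that holds admin credentials
# and make it the active config for the current shell session only.
secure_kubeconfig() {
  kubeconfig_file=$1
  if [ ! -f "$kubeconfig_file" ]; then
    echo "kubeconfig not found: $kubeconfig_file" >&2
    return 1
  fi
  # Owner read/write only; group and others get no access.
  chmod 600 "$kubeconfig_file" || return 1
  # kubectl reads KUBECONFIG when --kubeconfig isn't passed explicitly.
  KUBECONFIG=$kubeconfig_file
  export KUBECONFIG
}
```

Usage: run `secure_kubeconfig <myclusterconf>` after `az aksarc get-credentials ... --file <myclusterconf>`, and kubectl in that shell will use the admin file without touching ~/.kube/config.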

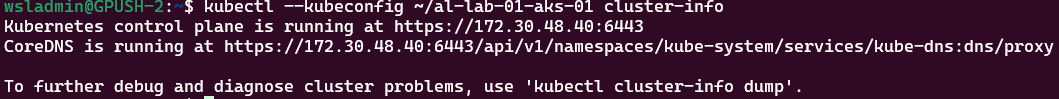

Here we're proving that the URL in the newly created config file is reachable, by using it to query the API for the cluster info:

Using SSH connecting to control-plane node

The second option is to SSH to one of the control-plane nodes to retrieve the details. This is a bit more involved. It assumes you have the private SSH key file, either because you provided it at deployment time or because you retrieved the one created for you. You'll also need the IP address of one of the control-plane nodes. There's no way to get this from the Azure portal, so we'll have to use kubectl via the proxy:

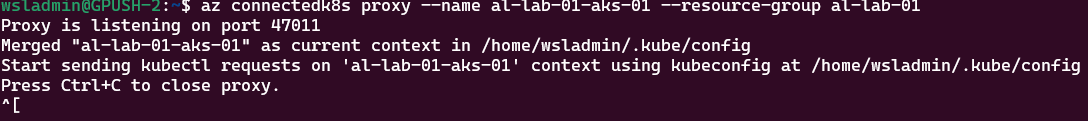

az connectedk8s proxy --name <myAKSCluster> --resource-group <myResourceGroup>

From a new shell, run:

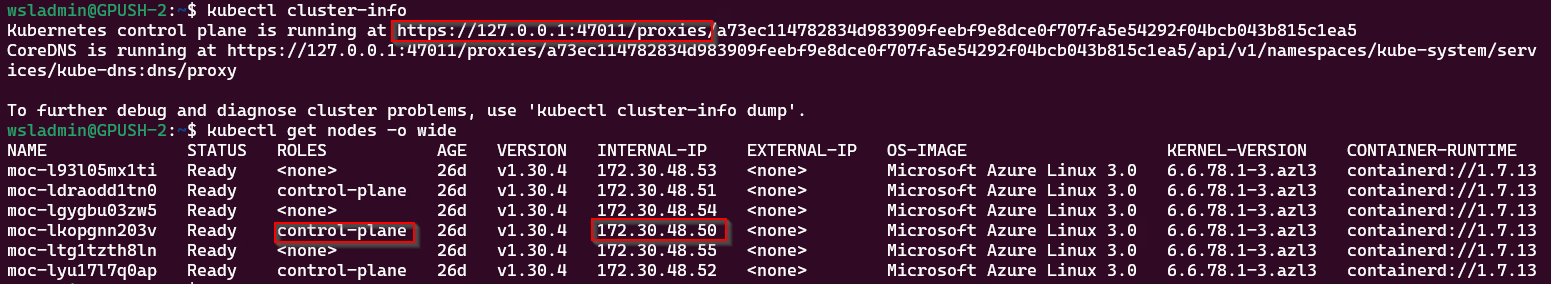

# Prove we're connected to the AKS cluster via the proxy

kubectl cluster-info

# Get the node information and IP addresses

kubectl get nodes -o wide

We can see the cluster is connected via the proxy session, along with a list of all the cluster nodes. We want to connect to a control-plane node, so select one that has that role and make a note of its internal IP address.
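If you'd rather not pick the IP out by eye, here is a minimal sketch that extracts it from the same table output. The function name is hypothetical. It assumes the default `-o wide` column order, in which INTERNAL-IP is column 6 and only later columns such as OS-IMAGE can contain spaces:

```shell
# Hypothetical helper: reads `kubectl get nodes -o wide` output on stdin and
# prints the INTERNAL-IP of the first node whose ROLES column contains
# "control-plane". Exits non-zero if no such node is found.
first_control_plane_ip() {
  awk 'NR > 1 && $3 ~ /control-plane/ { print $6; found = 1; exit }
       END { exit !found }'
}
```

Usage: `kubectl get nodes -o wide | first_control_plane_ip`. A jsonpath query such as `kubectl get nodes -l node-role.kubernetes.io/control-plane -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}'` should also work, assuming the nodes carry the standard control-plane role label.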

Use the following command to connect to the node:

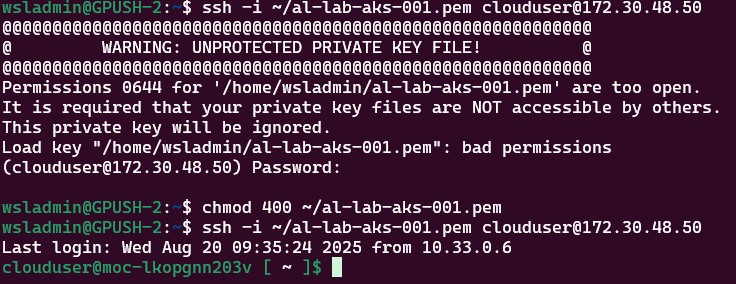

ssh -i <location of ssh private key file> clouduser@<control-plane ip>

# If you get an error saying the permissions on the key file are too open, run:

chmod 400 <location of ssh private key file>

Now that we know we can get in, exit the session and use the following command to write the output to a file on your local system:

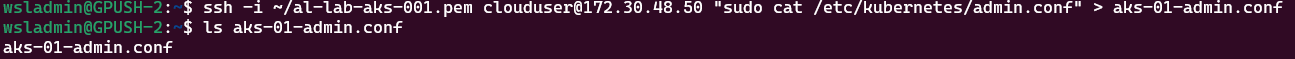

ssh -i <location of ssh private key file> clouduser@<control-plane ip> "sudo cat /etc/kubernetes/admin.conf" > ~/aks-01-admin.conf

Now we can quit our proxy session and test connectivity using the file retrieved from the node:

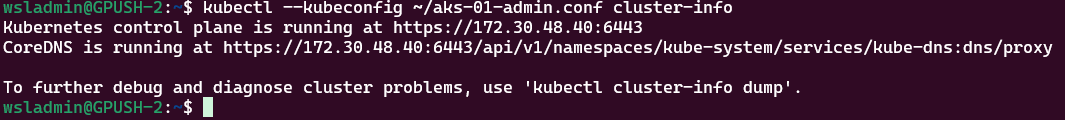

kubectl --kubeconfig ~/aks-01-admin.conf cluster-info

Running a debug container on one of the control-plane nodes

You can use this method if you don’t have the private SSH key to connect to the node directly.

First, we need to make a proxy connection to the cluster so we can run kubectl commands:

az connectedk8s proxy --name <myAKSCluster> --resource-group <myResourceGroup>

# Prove we're connected to the AKS cluster via the proxy

kubectl cluster-info

# Get the node information and IP addresses

kubectl get nodes -o wide

From the node output, get the name of one of the control-plane nodes and use it in the following commands:

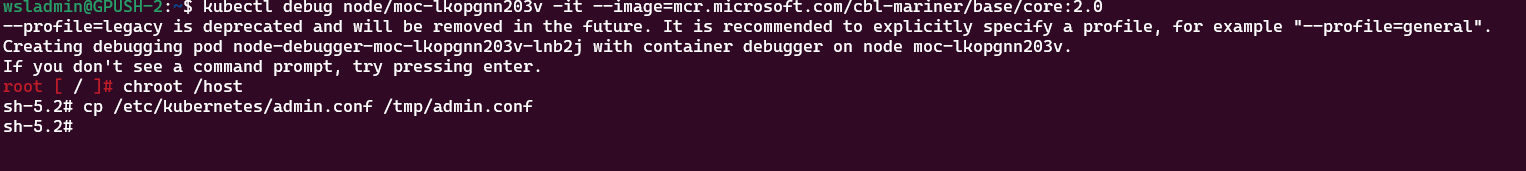

kubectl debug node/<control-plane-node-name> -it --image=mcr.microsoft.com/cbl-mariner/base/core:2.0

# use chroot to use the host file system

chroot /host

# copy the file to /tmp

cp /etc/kubernetes/admin.conf /tmp/admin.conf

Keep the debug session open, and from another shell run the following to copy the file from the debug container to your local machine:

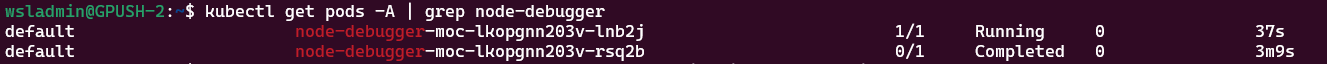

# Get the name of the running debug pod

kubectl get pods -A | grep node-debugger

# Copy the file from the host's /tmp (the host filesystem is mounted at /host in the debug pod):

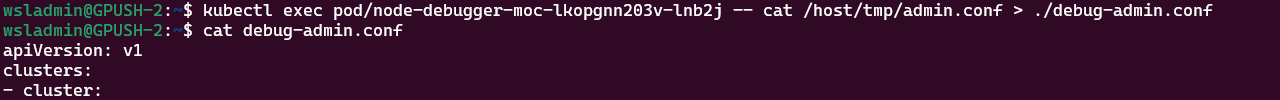

kubectl exec pod/<node-debugger-pod-name> -- cat /host/tmp/admin.conf > ./debug-admin.conf

Go back to the debug shell and exit from it.
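Before cleaning up, it's worth checking that the copied file is a complete kubeconfig. A shell redirect such as `> ./debug-admin.conf` creates the file even when the command before it fails, so an empty file is an easy way to be caught out. The same applies to the file fetched over SSH. Below is a minimal sketch. The function name is hypothetical, and it assumes the file uses the usual top-level `kind: Config` line found in admin.conf:

```shell
# Hypothetical helper: sanity-check a copied kubeconfig before relying on it.
# Fails if the file is missing, empty, or lacks a top-level "kind: Config".
looks_like_kubeconfig() {
  f=$1
  [ -s "$f" ] || { echo "missing or empty: $f" >&2; return 1; }
  grep -q '^kind: Config' "$f" || { echo "no 'kind: Config' in $f" >&2; return 1; }
}
```

Usage: `looks_like_kubeconfig ./debug-admin.conf && kubectl --kubeconfig ./debug-admin.conf cluster-info`.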

Once you’ve finished with the debug container, you can remove the pod from the cluster:

# Get the name of debug pods

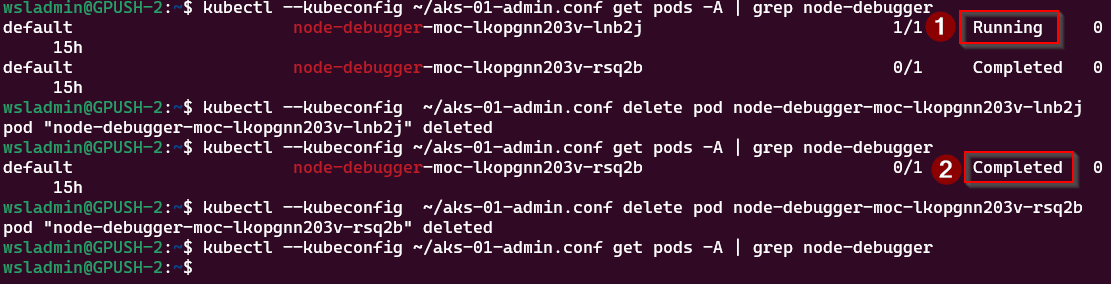

kubectl --kubeconfig ~/aks-01-admin.conf get pods -A | grep node-debugger

# Delete debug pod

kubectl --kubeconfig ~/aks-01-admin.conf delete pod <node-debugger-pod-name>You can see in the screenshot above that I had 2 debug sessions listed, one running and the other completed. The running session is because I didn’t exit from the pod, and went off to do something else (oops!). Not to worry, deleting the pod sorts that out.
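If you end up with several leftover debugger pods like this, a small filter over `kubectl get pods -A` can list them all for deletion. This is a minimal sketch. The function name is hypothetical, and it assumes the default column order (NAMESPACE first, NAME second):

```shell
# Hypothetical helper: reads `kubectl get pods -A` output on stdin and prints
# "<namespace> <pod-name>" for every pod whose name starts with
# node-debugger-, whatever its status (Running or Completed).
node_debugger_pods() {
  awk 'NR > 1 && $2 ~ /^node-debugger-/ { print $1, $2 }'
}
```

Usage: `kubectl get pods -A | node_debugger_pods | while read -r ns pod; do kubectl delete pod -n "$ns" "$pod"; done`.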

Conclusion

Clearly, the easiest way to retrieve the admin credentials is method one: use the Azure CLI aksarc extension. I wanted to show the other methods, though, as a reference for how to connect to the control-plane nodes and retrieve a file.

Installing MAAS CLI on WSL 2


This is a short post on how to install the MAAS CLI on WSL2 so you can administer your MAAS environments from your Windows system.

If you're not sure what MAAS (Metal-as-a-Service) is, it's an open source cloud platform from Canonical that lets you manage bare-metal infrastructure, such as networking and server deployments, within your DC. https://maas.io/how-it-works

My colleague Matthew Quickenden has written a number of blog posts that go into more depth here: https://www.cryingcloud.com/blog/tag/%23MAAS

TL;DR

Here are all the commands you need to run:

# Setup repo

MAAS_VERSION=3.5

sudo apt-add-repository ppa:maas/${MAAS_VERSION}

# Install OpenSSL Python module

sudo apt install python3-pip

pip install pyOpenSSL

# Install MAAS CLI

sudo apt install maas-cli

Installing MAAS CLI

Following the official documentation for installing the CLI, it tells you to initially run this command:

sudo apt install maas-cli

When you try this via WSL2 for the first time, you're likely to see the following error:

E: Unable to locate package maas-cli

To get around this you need to run the following:

MAAS_VERSION=3.5

sudo apt-add-repository ppa:maas/${MAAS_VERSION}

Running sudo apt install maas-cli will then give you something similar to this:

Depending on your setup, you may need to run the following to install the OpenSSL Python module:

# Install pip if not available

sudo apt install python3-pip

# Install OpenSSL module

pip install pyOpenSSL

If the module isn't present, you'll get an error like this:

When the prerequisites are in place, running the maas command should return something similar to this:
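If you'd rather check for the OpenSSL module up front than wait for the error, here is a minimal sketch. The function name is hypothetical. Note that the import name differs from the pip package name: pyOpenSSL is imported as `OpenSSL`.

```shell
# Hypothetical helper: succeeds if python3 can import the named module.
# pyOpenSSL (the pip package) is imported as "OpenSSL".
have_python_module() {
  python3 -c "import $1" >/dev/null 2>&1
}
```

Usage: `have_python_module OpenSSL || pip install pyOpenSSL`.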

Azure Container Storage for Azure Arc Edge Volumes - deploying on Azure Local AKS

Late last year, Microsoft released the latest version of the snappily titled 'Azure Container Storage enabled by Azure Arc' (ACSA), a solution that makes it easier to get data from your container workloads to Azure Blob Storage. You can read the overview here. In essence, it's pretty configurable: you can set up locally resilient storage for your container apps, or use it for cloud ingest, sending data to Azure and purging it locally once the transfer is confirmed.

The purpose of this post is to give an example of the steps needed to set this up on an Azure Local AKS cluster.

If you have an existing cluster you want to deploy to, take heed of the pre-reqs:

Single-node or 2-node cluster

per node:

- 4 CPUs

- 16 GB RAM

Multi-node cluster

per node:

- 8 CPUs

- 32 GB RAM

16GB RAM should be fine, but in more active scenarios, 32 GB is recommended.
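For a quick go/no-go check before deploying, here is a minimal sketch that compares a node's size against the per-node figures listed above. The function name is hypothetical. For multi-node clusters it uses the recommended 32 GB rather than the 16 GB that may be enough for quieter workloads. Pass in the vCPUs and RAM of the VM size you chose for the node pool:

```shell
# Hypothetical helper: compare one node's size with the per-node figures above.
# Args: <node count> <vCPUs per node> <GB RAM per node>
# 1-2 node clusters: 4 vCPUs / 16 GB; larger clusters: 8 vCPUs / 32 GB
# (the recommended figure; 16 GB may suffice for less active workloads).
meets_acsa_node_sizing() {
  node_count=$1 cpus=$2 mem_gb=$3
  if [ "$node_count" -le 2 ]; then
    min_cpus=4 min_mem_gb=16
  else
    min_cpus=8 min_mem_gb=32
  fi
  [ "$cpus" -ge "$min_cpus" ] && [ "$mem_gb" -ge "$min_mem_gb" ]
}
```

Usage: `meets_acsa_node_sizing 3 8 32 && echo "node size OK"`.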

Prepare AKS enabled by Azure Arc cluster

Make sure you have the latest AZ CLI extensions installed.

Azure Arc Kubernetes Extensions Documentation

# Make sure the az extensions are installed

az extension add --name connectedk8s --upgrade

az extension add --name k8s-extension --upgrade

az extension add -n k8s-runtime --upgrade

az extension add --name aksarc --upgrade

# Login to Azure

az login

az account set --subscription <subscription-id>

As of the time of writing, here are the versions of the extensions:

If you have a virgin cluster, you will need to install the Load Balancer.

# Check you have the relevant Graph permissions

az ad sp list --filter "appId eq '087fca6e-4606-4d41-b3f6-5ebdf75b8b4c'" --output json

# If that command returns an empty result, use the alternative method: https://learn.microsoft.com/en-us/azure/aks/aksarc/deploy-load-balancer-cli#option-2-enable-arc-extension-for-metallb-using-az-k8s-extension-add-command

# Enable the extension

RESOURCE_GROUP_NAME="YOUR_RESOURCE_GROUP_NAME" # name of the resource group where the AKS Arc cluster is deployed

CLUSTER_NAME="YOUR_CLUSTER_NAME"

AKS_ARC_CLUSTER_URI=$(az aksarc show --resource-group ${RESOURCE_GROUP_NAME} --name ${CLUSTER_NAME} --query id -o tsv | cut -d'/' -f1-9)

az k8s-runtime load-balancer enable --resource-uri $AKS_ARC_CLUSTER_URI
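The `cut -d'/' -f1-9` above trims the `az aksarc show` resource ID back to its parent connected cluster. Here is a minimal sketch of that step as a function. It assumes the ID has the nested shape shown in the comments, and the function name is hypothetical:

```shell
# Hypothetical helper: trim an AKS Arc provisioned cluster ID to the
# connected cluster URI. Assumed input shape:
#   /subscriptions/<sub>/resourceGroups/<rg>/providers/Microsoft.Kubernetes/
#   connectedClusters/<name>/providers/Microsoft.HybridContainerService/
#   provisionedClusterInstances/default
# Splitting on '/' yields an empty field 1 (the leading slash), so fields
# 1-9 end exactly at <name>.
connected_cluster_uri() {
  printf '%s\n' "$1" | cut -d'/' -f1-9
}
```

For an illustrative ID ending in `.../connectedClusters/aks01/providers/Microsoft.HybridContainerService/provisionedClusterInstances/default`, the function returns the path up to and including `connectedClusters/aks01`.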

# Deploy the Load Balancer

LB_NAME="al-lb-01" # must be lowercase, alphanumeric, '-' or '.' (RFC 1123)

IP_RANGE="192.168.1.100-192.168.1.150"

ADVERTISE_MODE="ARP" # Options: ARP, BGP, Both

az k8s-runtime load-balancer create --load-balancer-name $LB_NAME \

--resource-uri $AKS_ARC_CLUSTER_URI \

--addresses $IP_RANGE \

--advertise-mode $ADVERTISE_MODE

Open Service Mesh is used to deliver the ACSA capabilities, so deploy it on the connected AKS cluster using the following commands:

RESOURCE_GROUP_NAME="YOUR_RESOURCE_GROUP_NAME"

CLUSTER_NAME="YOUR_CLUSTER_NAME"

az k8s-extension create --resource-group $RESOURCE_GROUP_NAME \

--cluster-name $CLUSTER_NAME \

--cluster-type connectedClusters \

--extension-type Microsoft.openservicemesh \

--scope cluster \

--name osm \

--config "osm.osm.featureFlags.enableWASMStats=false" \

--config "osm.osm.enablePermissiveTrafficPolicy=false" \

--config "osm.osm.configResyncInterval=10s" \

--config "osm.osm.osmController.resource.requests.cpu=100m" \

--config "osm.osm.osmBootstrap.resource.requests.cpu=100m" \

--config "osm.osm.injector.resource.requests.cpu=100m"

Deploy IoT Operations Dependencies

The official documentation says to deploy the IoT Operations extension, specifically the cert-manager component. It doesn't say whether this is required if you aren't using Azure IoT Operations, so I deployed it anyway.

RESOURCE_GROUP_NAME="YOUR_RESOURCE_GROUP_NAME"

CLUSTER_NAME="YOUR_CLUSTER_NAME"

az k8s-extension create --cluster-name "${CLUSTER_NAME}" \

--name "${CLUSTER_NAME}-certmgr" \

--resource-group "${RESOURCE_GROUP_NAME}" \

--cluster-type connectedClusters \

--extension-type microsoft.iotoperations.platform \

--scope cluster \

--release-namespace cert-manager

Deploy the container storage extension

RESOURCE_GROUP_NAME="YOUR_RESOURCE_GROUP_NAME"

CLUSTER_NAME="YOUR_CLUSTER_NAME"

az k8s-extension create --resource-group "${RESOURCE_GROUP_NAME}" \

--cluster-name "${CLUSTER_NAME}" \

--cluster-type connectedClusters \

--name azure-arc-containerstorage \

--extension-type microsoft.arc.containerstorage

Now it's time to deploy the edge storage configuration. As my cluster is deployed on Azure Local AKS and is connected to Azure Arc, I went with the Arc config option detailed in the docs.

cat <<EOF > edgeConfig.yaml
apiVersion: arccontainerstorage.azure.net/v1
kind: EdgeStorageConfiguration
metadata:
  name: edge-storage-configuration
spec:
  defaultDiskStorageClasses:
    - "default"
    - "local-path"
  serviceMesh: "osm"
EOF

kubectl apply -f "edgeConfig.yaml"

Once it's deployed, you can list the storage classes available to the cluster:

kubectl get storageclass

Setting up cloud ingest volumes

Now we're ready to configure permissions on the Azure Storage Account so that the Edge Volume provider has access to upload data to the blob container.

You can use the script below to get the extension identity and then assign the necessary role to the storage account:

RESOURCE_GROUP_NAME="YOUR_RESOURCE_GROUP_NAME"

CLUSTER_NAME="YOUR_CLUSTER_NAME"

export EXTENSION_TYPE=${1:-"microsoft.arc.containerstorage"}

EXTENSION_IDENTITY_PRINCIPAL_ID=$(az k8s-extension list \

--cluster-name ${CLUSTER_NAME} \

--resource-group ${RESOURCE_GROUP_NAME} \

--cluster-type connectedClusters \

| jq --arg extType ${EXTENSION_TYPE} 'map(select(.extensionType == $extType)) | .[] | .identity.principalId' -r)

STORAGE_ACCOUNT_NAME="YOUR_STORAGE_ACCOUNT_NAME"

STORAGE_ACCOUNT_RESOURCE_GROUP="YOUR_STORAGE_ACCOUNT_RESOURCE_GROUP"

STORAGE_ACCOUNT_ID=$(az storage account show --name ${STORAGE_ACCOUNT_NAME} --resource-group ${STORAGE_ACCOUNT_RESOURCE_GROUP} --query id --output tsv)

az role assignment create --assignee ${EXTENSION_IDENTITY_PRINCIPAL_ID} --role "Storage Blob Data Contributor" --scope ${STORAGE_ACCOUNT_ID}

Create a deployment to test the cloud ingest volume

Now we can test transferring data from edge to cloud. I'm using the demo from Azure Arc Jumpstart.

First off, create a container on the storage account to store the data from the edge volume.

export STORAGE_ACCOUNT_NAME="YOUR_STORAGE_ACCOUNT_NAME"

export STORAGE_ACCOUNT_CONTAINER="fault-detection"

STORAGE_ACCOUNT_RESOURCE_GROUP="YOUR_STORAGE_ACCOUNT_RESOURCE_GROUP"

az storage container create --name ${STORAGE_ACCOUNT_CONTAINER} --account-name ${STORAGE_ACCOUNT_NAME} --resource-group ${STORAGE_ACCOUNT_RESOURCE_GROUP}

Next, create a file called acsa-deployment.yaml with the following content:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  ### Create a name for your PVC ###
  name: acsa-pvc
  ### Use a namespace that matches your intended consuming pod, or "default" ###
  namespace: default
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
  storageClassName: cloud-backed-sc
---
apiVersion: "arccontainerstorage.azure.net/v1"
kind: EdgeSubvolume
metadata:
  name: faultdata
spec:
  edgevolume: acsa-pvc
  path: faultdata # If you change this path, update the LOCAL_STORAGE value in the acsa-webserver Deployment below to match. Don't use a leading slash.
  auth:
    authType: MANAGED_IDENTITY
  storageaccountendpoint: "https://${STORAGE_ACCOUNT_NAME}.blob.core.windows.net/"
  container: ${STORAGE_ACCOUNT_CONTAINER}
  ingestPolicy: edgeingestpolicy-default # Optional: See the following instructions if you want to update the ingestPolicy with your own configuration
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: acsa-webserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: acsa-webserver
  template:
    metadata:
      labels:
        app: acsa-webserver
    spec:
      containers:
        - name: acsa-webserver
          image: mcr.microsoft.com/jumpstart/scenarios/acsa_ai_webserver:1.0.0
          resources:
            limits:
              cpu: "1"
              memory: "1Gi"
            requests:
              cpu: "200m"
              memory: "256Mi"
          ports:
            - containerPort: 8000
          env:
            - name: RTSP_URL
              value: rtsp://virtual-rtsp:8554/stream
            - name: LOCAL_STORAGE
              value: /app/acsa_storage/faultdata
          volumeMounts:
            ### This name must match the volumes.name attribute below ###
            - name: blob
              ### This mountPath is where the PVC will be attached to the pod's filesystem ###
              mountPath: "/app/acsa_storage"
      volumes:
        ### User-defined 'name' that will be used to link the volumeMounts. This name must match volumeMounts.name as specified above. ###
        - name: blob
          persistentVolumeClaim:
            ### This claimName must refer to the PVC resource 'name' as defined in the PVC config. This name will match what your PVC resource was actually named. ###
            claimName: acsa-pvc
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: virtual-rtsp
spec:
  replicas: 1
  selector:
    matchLabels:
      app: virtual-rtsp
  minReadySeconds: 10
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  template:
    metadata:
      labels:
        app: virtual-rtsp
    spec:
      initContainers:
        - name: init-samples
          image: busybox
          resources:
            limits:
              cpu: "200m"
              memory: "256Mi"
            requests:
              cpu: "100m"
              memory: "128Mi"
          command:
            - wget
            - "-O"
            - "/samples/bolt-detection.mp4"
            - https://github.com/ldabas-msft/jumpstart-resources/raw/main/bolt-detection.mp4
          volumeMounts:
            - name: tmp-samples
              mountPath: /samples
      containers:
        - name: virtual-rtsp
          image: "kerberos/virtual-rtsp"
          resources:
            limits:
              cpu: "500m"
              memory: "512Mi"
            requests:
              cpu: "200m"
              memory: "256Mi"
          imagePullPolicy: Always
          ports:
            - containerPort: 8554
          env:
            - name: SOURCE_URL
              value: "file:///samples/bolt-detection.mp4"
          volumeMounts:
            - name: tmp-samples
              mountPath: /samples
      volumes:
        - name: tmp-samples
          emptyDir: { }
---
apiVersion: v1
kind: Service
metadata:
  name: virtual-rtsp
  labels:
    app: virtual-rtsp
spec:
  type: LoadBalancer
  ports:
    - port: 8554
      targetPort: 8554
      name: rtsp
      protocol: TCP
  selector:
    app: virtual-rtsp
---
apiVersion: v1
kind: Service
metadata:
  name: acsa-webserver-svc
  labels:
    app: acsa-webserver
spec:
  type: LoadBalancer
  ports:
    - port: 80
      targetPort: 8000
      protocol: TCP
  selector:
    app: acsa-webserver

Once created, apply the deployment:

export STORAGE_ACCOUNT_NAME="YOUR_STORAGE_ACCOUNT_NAME" # envsubst only sees exported variables
export STORAGE_ACCOUNT_CONTAINER="fault-detection" # must match the container created earlier

envsubst < acsa-deployment.yaml | kubectl apply -f -

Note: this deploys into the default namespace.

This creates the deployments, services and volumes, substituting the storage account name and container name with the variables set above.
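One gotcha with this approach: envsubst only sees exported variables, and it replaces any it can't find with an empty string. An unexported or mistyped variable therefore silently renders as `https://.blob.core.windows.net/` rather than failing. Here is a minimal guard you can run first. The function name is hypothetical:

```shell
# Hypothetical helper: fail if any named variable is unset or empty in the
# *environment* (which is all envsubst can see), listing each one missing.
require_exported() {
  missing=0
  for name in "$@"; do
    if [ -z "$(printenv "$name")" ]; then
      echo "not exported or empty: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `require_exported STORAGE_ACCOUNT_NAME STORAGE_ACCOUNT_CONTAINER && envsubst < acsa-deployment.yaml | kubectl apply -f -`.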

If you want to check the status of the edge volume, such as whether it's connected or how many files are in the queue, you can use the following commands:

# List the edge subvolumes

kubectl get edgesubvolume

kubectl describe edgesubvolume faultdata

Testing

Assuming everything has deployed without errors, you should be able to access the web server at its external IP address. You can find the IP address by running:

kubectl get svc acsa-webserver-svc

Obtain the EXTERNAL-IP and port (should be 80) and use them to access the web server.
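To turn that output straight into a URL, here is a minimal sketch. The function name is hypothetical. It assumes kubectl's default table layout (EXTERNAL-IP in column 4, PORT(S) in column 5 as `port:nodePort/protocol`):

```shell
# Hypothetical helper: reads `kubectl get svc <name>` output (default table)
# on stdin and prints http://<EXTERNAL-IP>:<port>. The port is the part of
# the PORT(S) column before ":" (e.g. 80:31234/TCP -> 80). Fails while the
# load balancer hasn't assigned an address yet.
svc_http_url() {
  awk 'NR == 2 {
         if ($4 == "<pending>" || $4 == "<none>") exit 1
         split($5, port, "[:/]")
         print "http://" $4 ":" port[1]
         ok = 1
       }
       END { exit !ok }'
}
```

Usage: `kubectl get svc acsa-webserver-svc | svc_http_url`. If you prefer to avoid parsing the table, `kubectl get svc acsa-webserver-svc -o jsonpath='{.status.loadBalancer.ingress[0].ip}'` returns just the IP.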

Take a look at the edge subvolume for metrics:

kubectl get edgesubvolume

And that's how simple (?!) it is to set up. As long as you've met the prerequisites and set permissions properly, it's pretty smooth to implement.

HCI Box on a Budget. Leverage Azure Spot & Hybrid Use Benefits. Up to 93% savings.

Do you want to take HCI Box for a test drive but don't have $2,681 in the budget? Me neither. How about the same box for $178?

This is the price for 730 hours

Follow the general instructions from Azure Arc Jumpstart.

Once you have the git repo, edit the host.bicep file:

...\azure_arc\azure_jumpstart_hcibox\bicep\host\host.bicep

In the properties of the host virtual machine resource (resource vm 'Microsoft.Compute/virtualMachines@2022-03-01'), add:

priority: 'Spot'

evictionPolicy: 'Deallocate'

billingProfile: {

maxPrice: -1

}

You can compare different regions for either a cheaper price per hour or a lower eviction rate.

$0.24393 per hour * 730 hours = $178
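As a quick check of the headline figure, working from the full price and the spot rate quoted above:

```latex
\begin{aligned}
\text{spot cost} &= \$0.24393/\text{h} \times 730\,\text{h} \approx \$178.07 \\
\text{saving} &= \frac{2681 - 178.07}{2681} \approx 0.934 \approx 93\%
\end{aligned}
```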

If you're eligible for Hybrid Use Benefits through your EA, or have licenses, you can also enable HUB in the Bicep template under the virtual machine properties:

licenseType: 'Windows_Server'

Code changes

...

resource vm 'Microsoft.Compute/virtualMachines@2022-03-01' = {

name: vmName

location: location

tags: resourceTags

properties: {

licenseType: 'Windows_Server'

priority: 'Spot'

evictionPolicy: 'Deallocate'

billingProfile: {

maxPrice: -1

}

...

Good luck, enjoy HCI’ing

Importing Root CA to Azure Stack Linux VM at provisioning time.

Deploying Linux VMs on Azure Stack Hub with Enterprise CA Certificates? Here's a Solution!

When deploying Linux VMs on Azure Stack Hub in a corporate environment, you may encounter issues with TLS endpoint certificates signed by an internal Enterprise CA. In this post, we'll explore a technique for importing the root CA certificate into the truststore of your Linux VMs, enabling seamless access to TLS endpoints. We'll also show you how to use Terraform to automate the process, including provisioning a VM, importing the CA certificate, and running a custom script.

In a loose continuation of my previous post on using Terraform with Azure Stack Hub, I describe a technique for those deploying Linux VMs in an environment where an Enterprise CA has been used to sign the endpoint SSL certs.

Problem statement

Normally, when a trusted third-party Certificate Authority signs the TLS endpoint certs, the root/intermediate/signing CA public certificates are already available in the CA truststore, so you don't have to add them manually. This means you can access TLS endpoints (https sites) without errors being thrown.

As Azure Stack Hub is typically deployed in corporate environments, many use an internal Enterprise CA or self-signed CA to create the mandatory certificates for the public endpoints. The devices accessing services hosted on ASH should have the internal enterprise root CA public cert in the local trusted cert store, so there will be no problems from the client side.

The problem, however, is that Marketplace VMs (e.g. ones built from Marketplace items you've downloaded) won't have your signing root CA in the truststore. This is an issue for automation. Typically the install script is uploaded to a Storage Account, and the Azure Linux VM Agent fetches it from there and runs it. If the storage account endpoint's TLS certificate is untrusted, an error is thrown and you can't run your script :(

Importing the root CA into the truststore

If you're building VMs, there are two options to ensure that the internal root CA is baked into the OS at provisioning time:

- create a custom image and publish it to the marketplace. Import the root CA and use the generalized VHD as the base OS disk.

- run a command at build time to import the root CA

The first option is quite involved, so I prefer the second option :) Thankfully, the command can be distilled to a one-liner:

# Debian/Ubuntu

sudo cp /var/lib/waagent/Certificates.pem /usr/local/share/ca-certificates/ash-ca-trust.crt && sudo update-ca-certificates

# RHEL/CentOS

sudo cp /var/lib/waagent/Certificates.pem /etc/pki/ca-trust/source/anchors/ && sudo update-ca-trust

The Azure Linux VM Agent keeps a copy of the root CA in the waagent directory, which is what makes the one-liner possible.
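The two variants differ because each distro family uses its own tooling. On Debian and Ubuntu, update-ca-certificates only picks up files ending in .crt under /usr/local/share/ca-certificates, hence the rename. On RHEL-family systems, update-ca-trust reads PEM files placed in /etc/pki/ca-trust/source/anchors/. If you build images for both, here is a minimal sketch that picks the right one-liner from the distro ID in /etc/os-release. The function name and the list of IDs it recognises are assumptions:

```shell
# Hypothetical helper: print the matching root CA import one-liner for a
# distro ID as found in /etc/os-release ($ID). Returns 1 for unknown IDs.
ca_import_cmd() {
  case "$1" in
    ubuntu|debian)
      echo 'sudo cp /var/lib/waagent/Certificates.pem /usr/local/share/ca-certificates/ash-ca-trust.crt && sudo update-ca-certificates' ;;
    rhel|centos|rocky|almalinux|ol)
      echo 'sudo cp /var/lib/waagent/Certificates.pem /etc/pki/ca-trust/source/anchors/ && sudo update-ca-trust' ;;
    *)
      echo "unsupported distro: $1" >&2
      return 1 ;;
  esac
}
```

Usage on the VM: `. /etc/os-release && sh -c "$(ca_import_cmd "$ID")"`.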

Using Terraform

So, we want to provision a VM and then, once it's up and running, run a script to configure it. We need to import the root CA before we can download the script and run it. It's all fairly straightforward, but there is one consideration.

Using the azurestack_virtual_machine_extension resource, we need to define the publisher and type. Typically this would be:

publisher = "Microsoft.Azure.Extensions"

type = "CustomScript"

type_handler_version = "2.0"

This would allow us to run a command (we don't have to use a script!):

settings = <<SETTINGS

{

"commandToExecute": "sudo cp /var/lib/waagent/Certificates.pem /etc/pki/ca-trust/source/anchors/ && sudo update-ca-trust"

}

SETTINGS

In theory, we can deploy another VM extension that depends on the CA import resource completing.

That's correct, but we're restricted to deploying only one CustomScript extension per VM. Otherwise, terraform plan fails and tells us so.

We need to find an alternative type which will achieve the same objective.

Here's where we can use CustomScriptForLinux. It does the same job as the CustomScript type, but because it's a different extension type, it lets us get around the restriction.

Here's how it would look:

locals {

vm_name = "example-machine"

storage_account_name = "assets"

}

resource "azurestack_resource_group" "example" {

name = "example-resources"

location = "West Europe"

}

resource "azurestack_virtual_network" "example" {

name = "example-network"

address_space = ["10.0.0.0/16"]

location = azurestack_resource_group.example.location

resource_group_name = azurestack_resource_group.example.name

}

resource "azurestack_subnet" "example" {

name = "internal"

resource_group_name = azurestack_resource_group.example.name

virtual_network_name = azurestack_virtual_network.example.name

address_prefix = "10.0.2.0/24"

}

resource "azurestack_network_interface" "example" {

name = "example-nic"

location = azurestack_resource_group.example.location

resource_group_name = azurestack_resource_group.example.name

ip_configuration {

name = "internal"

subnet_id = azurestack_subnet.example.id

private_ip_address_allocation = "Dynamic"

}

}

resource "tls_private_key" "ssh_key" {

algorithm = "RSA"

rsa_bits = 4096

}

# Storage account to store the custom script

resource "azurestack_storage_account" "vm_sa" {

name = local.storage_account_name

resource_group_name = azurestack_resource_group.example.name

location = azurestack_resource_group.example.location

account_tier = "Standard"

account_replication_type = "LRS"

}

# the container to store the custom script

resource "azurestack_storage_container" "assets" {

name = "assets"

storage_account_name = azurestack_storage_account.vm_sa.name

container_access_type = "private"

}

# upload the script to the storage account (located in same dir as the main.tf)

resource "azurestack_storage_blob" "host_vm_install" {

name = "install_host_vm.sh"

storage_account_name = azurestack_storage_account.vm_sa.name

storage_container_name = azurestack_storage_container.assets.name

type = "Block"

source = "install_host_vm.sh"

}

# Create the VM

resource "azurestack_virtual_machine" "example" {

name = "example-machine"

resource_group_name = azurestack_resource_group.example.name

location = azurestack_resource_group.example.location

vm_size = "Standard_F2"

network_interface_ids = [

azurestack_network_interface.example.id,

]

os_profile {

computer_name = local.vm_name

admin_username = "adminuser"

}

os_profile_linux_config {

disable_password_authentication = true

ssh_keys {

path = "/home/adminuser/.ssh/authorized_keys"

key_data = tls_private_key.ssh_key.public_key_openssh

}

}

storage_image_reference {

publisher = "Canonical"

offer = "0001-com-ubuntu-server-jammy"

sku = "22_04-lts"

version = "latest"

}

storage_os_disk {

name = "${local.vm_name}-osdisk"

create_option = "FromImage"

caching = "ReadWrite"

managed_disk_type = "Standard_LRS"

os_type = "Linux"

disk_size_gb = 60

}

}

# import the CA certificate to truststore

resource "azurestack_virtual_machine_extension" "import_ca_bundle" {

name = "import_ca_bundle"

virtual_machine_id = azurestack_virtual_machine.example.id

publisher = "Microsoft.OSTCExtensions" # CustomScriptForLinux is the v1 Custom Script handler, published under Microsoft.OSTCExtensions

type = "CustomScriptForLinux"

type_handler_version = "1.5"

depends_on = [

azurestack_virtual_machine.example

]

protected_settings = <<PROTECTED_SETTINGS

{

"commandToExecute": "sudo cp /var/lib/waagent/Certificates.pem /usr/local/share/ca-certificates/ash-ca-trust.crt && sudo update-ca-certificates"

}

PROTECTED_SETTINGS

}

# install the custom script using different extension type

resource "azurestack_virtual_machine_extension" "install_vm_config" {

name = "install_vm_config"

virtual_machine_id = azurestack_virtual_machine.example.id

publisher = "Microsoft.Azure.Extensions"

type = "CustomScript"

type_handler_version = "2.0"

depends_on = [

azurestack_virtual_machine_extension.import_ca_bundle

]

settings = <<SETTINGS

{

"fileUris": ["${azurestack_storage_blob.host_vm_install.id}"]

}

SETTINGS

protected_settings = <<PROTECTED_SETTINGS

{

"storageAccountName": "${azurestack_storage_account.vm_sa.name}",

"storageAccountKey": "${azurestack_storage_account.vm_sa.primary_access_key}",

"commandToExecute": "bash install_host_vm.sh"

}

PROTECTED_SETTINGS

}

Thanks for reading, and I hope it's given you some inspiration!
