As I’ve been deploying and testing AKS on Azure Stack HCI, I wanted to test the deployment and management of Azure Arc enabled data services running on one of my clusters.

This post is part one of a series that documents what I did to set up the tools and deploy a data controller. In other posts, I’ll detail deploying a PostgreSQL instance and how to upload metrics and usage data to Azure.

Part 1 discusses installation of the tools required to deploy and manage the data controller.

Part 2 describes how to deploy and manage a PostgreSQL hyperscale instance.

Part 3 describes how we can monitor our instances from Azure.

Hopefully it will give you some insight into what’s involved in getting started.

I’ll assume that you have an Azure Stack HCI cluster with AKS running, as that is the setup I have. If you have another K8s cluster, the steps should be easy enough to follow and adapt :)

Install the tools

To begin, we need to set up the tools. As I’m on Windows 10, the instructions here are geared towards Windows, but I will link to the official documentation for other operating systems.

- Install Azure Data CLI (azdata)

  - Run the Windows Installer to deploy.

  - Official documentation

- Install Azure Data Studio

  - Run the Windows Installer to deploy.

  - Official documentation

- Install Azure CLI

  - Install using the following PowerShell command:

    Invoke-WebRequest -Uri https://aka.ms/installazurecliwindows -OutFile .\AzureCLI.msi; Start-Process msiexec.exe -Wait -ArgumentList '/I AzureCLI.msi /quiet'; rm .\AzureCLI.msi

  - Official documentation

- Install Kubernetes CLI (kubectl)

  - Install using the following PowerShell commands:

    Install-Script -Name 'install-kubectl' -Scope CurrentUser -Force

    install-kubectl.ps1 [-DownloadLocation <path>]

  - Official documentation
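Before moving on, it can be worth confirming that the command-line tools ended up on the PATH. The following is a minimal sketch in Python, assuming the tools are exposed under their usual executable names (`azdata`, `az` and `kubectl`); the `find_tools` helper is my own illustration and not part of any of the installers.

```python
import shutil

# Executable names as the tools are normally exposed on the PATH (assumption).
REQUIRED_TOOLS = ("azdata", "az", "kubectl")


def find_tools(names=REQUIRED_TOOLS):
    """Map each tool name to its resolved path, or None if it is not on the PATH."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    for name, path in find_tools().items():
        print(f"{name:8} {path or 'NOT FOUND'}")
```

If a tool shows as not found straight after installation, opening a new terminal so that the updated PATH is picked up is usually the first thing to try.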

Once you’ve installed the tools above, go ahead and run Azure Data Studio - we need to install some additional extensions before we can go ahead and deploy a data controller.

Open the Extensions pane, and install Azure Arc and Azure Data CLI as per the screenshot below.

Deploying the data controller

Once the extensions are installed, you’re ready to deploy a data controller, which is required before you can deploy the PostgreSQL or SQL DB instances within your K8s cluster.

Open the Connections pane, click the ellipsis and select New Deployment:

From the new window, select Azure Arc data controller (preview) and then click Select.

This will bring up the Create Azure Arc data controller install steps. Step 1 is to choose the kubeconfig file for your cluster. If you’re running AKS HCI, check out my previous post on managing AKS HCI clusters from Windows 10; it includes the steps required to retrieve the kubeconfig files for your clusters.
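If you’re not sure where your kubeconfig file lives, the sketch below lists the locations kubectl itself would look at: the paths in the `KUBECONFIG` environment variable if it is set, otherwise `.kube/config` in your home directory. The helper names are hypothetical, and the sketch only locates files; it does not validate their contents.

```python
import os
from pathlib import Path


def kubeconfig_candidates(env=None):
    """Return kubeconfig paths in the order kubectl would consider them."""
    env = os.environ if env is None else env
    value = env.get("KUBECONFIG", "")
    if value:
        # KUBECONFIG may hold several paths separated by the OS path separator.
        return [Path(p) for p in value.split(os.pathsep) if p]
    return [Path.home() / ".kube" / "config"]


def existing_kubeconfigs(env=None):
    """Keep only the candidate paths that exist as files."""
    return [p for p in kubeconfig_candidates(env) if p.is_file()]


if __name__ == "__main__":
    for path in existing_kubeconfigs():
        print(path)
```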

Step 2 is where you choose the config profile. Make sure azure-arc-aks-hci is selected, then click Next.

Step 3 is where we specify which Azure Account, Subscription and Resource Group we want to associate the data controller with.

Within the Data controller details, I specified the ‘default’ values:

| Parameter | Value |
|---|---|
| Data controller namespace | arc |
| Data controller name | arc-dc |
| Storage Class | default |
| Location | East US |

I’ve highlighted Storage class because the dropdown is blank when you select it, so I manually typed in default. This is a bug in the extension and causes an issue in a later step, but it can be fixed :)

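Because the Storage class dropdown is blank, it can help to check which storage classes the cluster actually offers before typing a name in. This is a minimal sketch, assuming kubectl is on the PATH and can reach the cluster. The function names are hypothetical helpers of my own; the annotation is the standard Kubernetes marker for a default storage class.

```python
import json
import subprocess

# Standard Kubernetes annotation that marks a storage class as the default.
DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def parse_storage_classes(payload):
    """Return (name, is_default) pairs from `kubectl get storageclass -o json` output."""
    items = json.loads(payload).get("items", [])
    result = []
    for item in items:
        meta = item.get("metadata", {})
        annotations = meta.get("annotations") or {}
        result.append((meta.get("name"), annotations.get(DEFAULT_ANNOTATION) == "true"))
    return result


def list_storage_classes(kubeconfig=None):
    """Ask the cluster for its storage classes (requires kubectl and cluster access)."""
    cmd = ["kubectl", "get", "storageclass", "-o", "json"]
    if kubeconfig:
        cmd += ["--kubeconfig", kubeconfig]
    out = subprocess.run(cmd, check=True, capture_output=True, text=True).stdout
    return parse_storage_classes(out)
```

Calling `list_storage_classes()` against the cluster returns the names you can safely enter in the Storage Class field.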

Click Next to proceed.

Step 4 generates a Jupyter notebook containing the scripts to deploy our data controller. If it’s the first time it has been run, some prerequisites need installing first. The first of these is to configure the Python runtime.

I went with the defaults; click Next to install.

Once that’s in place, next is to install Jupyter. There are no options; just click Install.

Once Jupyter has been deployed, try clicking Run all to see what happens. You’ll probably find it errors, like below:

I’ve highlighted the problem - the Pandas module is not present. This is simple enough to fix.

From within the notebook, click on the Manage Packages icon.

Go to Add new and type pandas into the search box. Click Install to have pip install it.

In the Tasks window, you’ll see when it has been successfully installed.
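Rather than finding missing modules one failed run at a time, you could check for them up front from a notebook cell. This is a small sketch; pandas and numpy are the two modules that tripped me up, and `missing_modules` is a hypothetical helper name. It needs to run in the same Python runtime the notebook uses, otherwise it reports on a different environment.

```python
import importlib.util


def missing_modules(names):
    """Return the module names that cannot be found in the current Python runtime."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(["pandas", "numpy"])
    print("Missing modules:", ", ".join(missing) if missing else "none")
```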

With the pandas module installed, try running the notebook again. You might find that you get another error pretty soon.

This time, the error indicates that there is a problem with the numpy module that’s installed. The latest release of numpy has a problem on Windows, so the workaround is to install an older version of the module.

Click on Manage Packages as we did when installing the pandas module.

Go to Add new and type numpy into the search box. Select Package Version 1.18.5. Click Install to have pip install it.

You may also see some warnings regarding the version of pip; you can use the same method as above to get the latest version.
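The Manage Packages dialog is effectively running a version-pinned pip install. The sketch below builds the equivalent command and reports which version is currently installed. It assumes Python 3.8 or later (for `importlib.metadata`), and both helper names are hypothetical.

```python
import sys
from importlib import metadata


def pip_install_command(package, version=None, python=sys.executable):
    """Build the pip command that installs a package, optionally pinned to a version."""
    spec = f"{package}=={version}" if version else package
    return [python, "-m", "pip", "install", spec]


def installed_version(package):
    """Return the installed version of a package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    print("numpy installed:", installed_version("numpy"))
    print("pin with:", " ".join(pip_install_command("numpy", "1.18.5")))
```

The command list can be passed to `subprocess.run` if you prefer to script the install instead of using the dialog.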

OK, once all that is done, run the notebook again. I found that yet another error was thrown. Remember when I said there was a bug when setting the Storage Class? Well, it looks like even though I manually specified it as ‘default’ it didn’t set the variable, as can be seen in the output below.

The -sc parameter is not set. Not to worry, we can change this in the set variables section of the notebook:

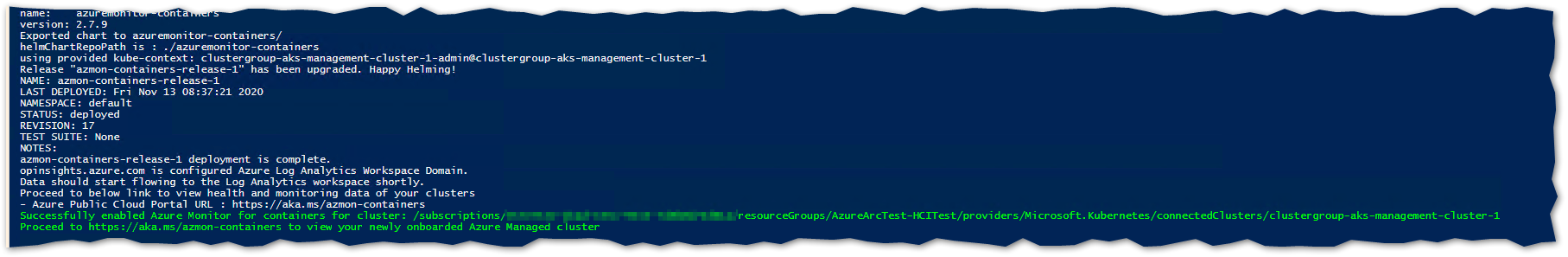

arc_data_controller_storage_class = 'default'

Then click Run all again. When the Create Azure Arc Data Controller cell runs, you’ll notice in the output that the parameter is correctly set this time around.

From here on, there shouldn’t be any problems and the data controller deployment should complete successfully. Make a note of the data controller endpoint URL, as you’ll need this for the next step.
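If you want to confirm the deployment from outside the notebook, one option is to look at the pods in the data controller namespace (`arc` in my case). This is a minimal sketch, assuming kubectl is on the PATH and pointed at the right cluster; the helper names are my own.

```python
import json
import subprocess
from collections import Counter


def summarize_pod_phases(payload):
    """Count pods by phase from `kubectl get pods -o json` output."""
    items = json.loads(payload).get("items", [])
    return Counter(item.get("status", {}).get("phase", "Unknown") for item in items)


def get_pod_phases(namespace="arc", kubeconfig=None):
    """Query the cluster for pod phases in a namespace (requires cluster access)."""
    cmd = ["kubectl", "get", "pods", "-n", namespace, "-o", "json"]
    if kubeconfig:
        cmd += ["--kubeconfig", kubeconfig]
    out = subprocess.run(cmd, check=True, capture_output=True, text=True).stdout
    return summarize_pod_phases(out)
```

Pods that stay in the Pending phase are worth inspecting with kubectl before retrying the notebook.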

Connect to the Controller

Now that the data controller has been deployed, we need to connect to it from within Azure Data Studio.

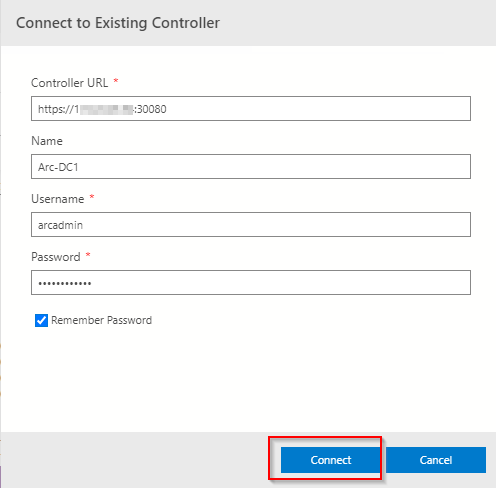

From the Connections pane, expand Azure Arc Controllers and click Connect Controller.

Within the Connect to Existing Controller pane, enter the controller URL recorded in the previous step, along with the name, username and password that were specified when setting up the data controller.

All being well, you’ll now see the entry in the Connections pane.

As you can see, there were a few things I had to work around, but as this is a Preview product, that doesn’t bother me: getting it to work means I learn more about what is going on under the covers. I’m sure that by the time it is GA, the issues will be resolved.